Google Doubles Down on Getting Rid of Offensive Search Predictions

The company is widening its definition of "inappropriate."

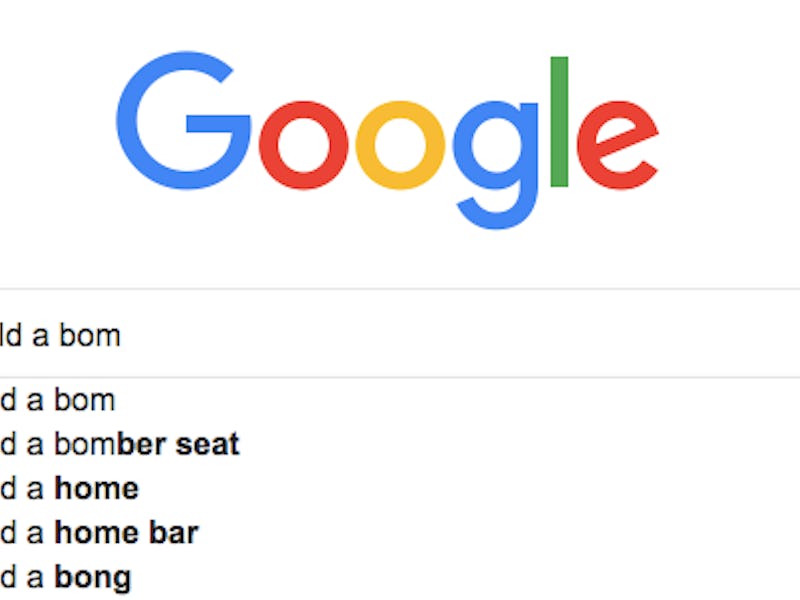

Have you ever wondered why certain phrases come up when you start to type a search query into Google? Probably, because occasionally the predictions are hilarious, if not bizarre. They’ve also been known to be highly offensive, and that is something Google is actively trying to change.

In a blog post on Friday, Public Liaison for Search Danny Sullivan revealed more about Google’s autocomplete function, and how the company is doubling down on what it’s calling “inappropriate predictions.”

Search predictions are algorithmically created phrases meant to save users some typing time. Sullivan says Google predicts what you’re about to type based on common searches, as well as your location and your search history. Of course, the world is not a perfect place, and racists and misogynists use Google too, leading to reported predictive results such as, “white supremacy is good.”

Last year, Google launched a feedback tool to allow users who experienced offensive predictions to report them. Building on the data gathered from that tool, the company has decided to expand its criteria for what counts as hateful:

Our existing policy protecting groups and individuals against hateful predictions only covers cases involving race, ethnic origin, religion, disability, gender, age, nationality, veteran status, sexual orientation or gender identity. Our expanded policy for search will cover any case where predictions are reasonably perceived as hateful or prejudiced toward individuals and groups, without particular demographics.

Google is also homing in on “violent” predictions, further expanding its definitions:

As for violence, our policy will expand to cover removal of predictions which seem to advocate, glorify or trivialize violence and atrocities, or which disparage victims.

This is probably a good idea. Social media giants like Facebook and Reddit have come under fire for not adequately safeguarding against hate speech. Considering that search predictions are culled from what users are searching for, Google has a similar responsibility to limit the reach of bad actors.

Google refuses to fill in the blanks.

It’s proven to be an uphill battle. Even back in February, Wired’s Issie Lapowsky wrote of finding offensive results to search queries done incognito, from the term “feminists are” resulting in “feminists are sexist” to “Islamists are,” resulting in, “Islamists are not our friends,” and “Islamists are evil.” Derp.

After doing some cursory searches today, it appears that Google has done away with a lot of the predictive results ending in “are” entirely. Searching in incognito mode, this writer found that no predictive results show up at all if you type in “Gays are,” or “Black people are,” for example. When it comes to Google’s autocomplete, perhaps silence is golden.