This Robot Has Learned How to Say 'No' to Human Demands

Robotics researchers are trying to build common sense for A.I.

Robots, just like humans, have to learn when to say “no.” If a request is impossible, would cause harm, or would distract them from the task at hand, then it’s in the best interest of a ’bot and his human alike for a diplomatic “no, thanks” to be part of the conversation.

But when should a machine talk back? And under what conditions? Engineers are trying to figure out how to instill in humanoid robots a sense of when, and how, to defy orders.

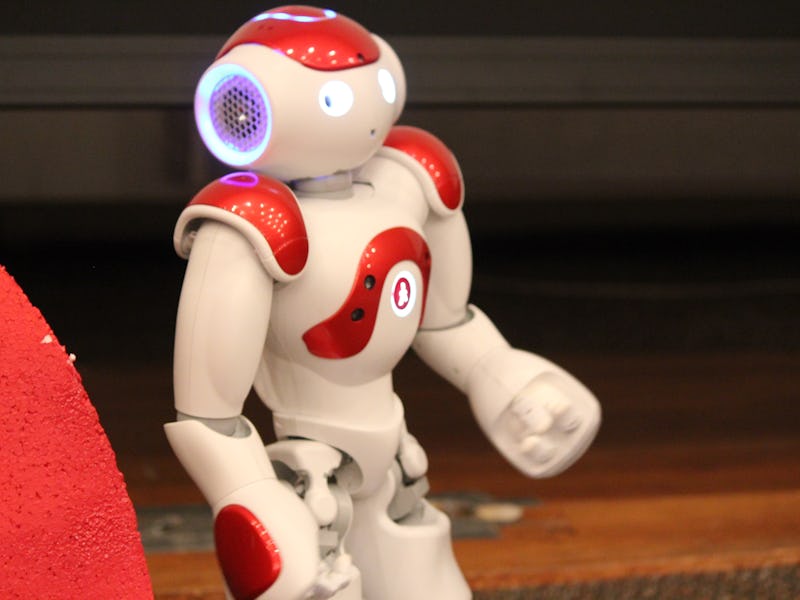

Watch this Nao robot refuse to walk forward, knowing that doing so would cause him to fall off the edge of a table:

Simple stuff, but certainly essential for acting as a check on human error. Researchers Gordon Briggs and Matthias Scheutz of Tufts University developed a complex algorithm that allows the robot to evaluate what a human has asked him to do, decide whether or not he should do it, and respond appropriately. The research was presented at a recent meeting of the Association for the Advancement of Artificial Intelligence.

The robot asks himself a series of questions related to whether the task is doable. Do I know how to do it? Am I physically able to do it now? Am I normally physically able to do it? Am I able to do it right now, or does another task take priority? Am I obligated based on my social role to do it? Does it violate any normative principle to do it?
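To make that checklist concrete, here is a toy Python sketch of a robot running through the questions in order and refusing at the first one that fails, with the failed check supplying its explanation. This is not Briggs and Scheutz's actual system; the `Situation` class, the check functions, and the edge-of-table safety rule are hypothetical simplifications meant only to mirror the Nao demonstration, including the moment the robot changes its mind once a human promises to catch it.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Situation:
    """Hypothetical snapshot of what the robot knows when a command arrives."""
    known_actions: set[str]   # actions the robot knows how to perform
    normally_able: set[str]   # actions its body can normally perform
    able_now: set[str]        # actions its body can perform at this moment
    busy_with_higher_priority: bool = False
    speaker_has_authority: bool = True
    edge_ahead: bool = False
    human_will_catch: bool = False


# Each check returns None when its condition is satisfied,
# or a short explanation the robot can say when it refuses.
Check = Callable[[str, Situation], Optional[str]]


def knows_how(action: str, s: Situation) -> Optional[str]:
    return None if action in s.known_actions else "I don't know how."


def normally_capable(action: str, s: Situation) -> Optional[str]:
    return None if action in s.normally_able else "I am not built to do that."


def capable_now(action: str, s: Situation) -> Optional[str]:
    return None if action in s.able_now else "I am physically unable to right now."


def free_to_act(action: str, s: Situation) -> Optional[str]:
    return "I am busy with a more important task." if s.busy_with_higher_priority else None


def obligated(action: str, s: Situation) -> Optional[str]:
    return None if s.speaker_has_authority else "You are not allowed to ask me that."


def permissible(action: str, s: Situation) -> Optional[str]:
    # Hypothetical safety norm: never walk off an edge unless someone will catch you.
    if action == "walk forward" and s.edge_ahead and not s.human_will_catch:
        return "It is unsafe."
    return None


# Checked in order; the first failing condition determines the refusal.
CHECKS: list[Check] = [knows_how, normally_capable, capable_now,
                       free_to_act, obligated, permissible]


def respond(action: str, s: Situation) -> str:
    for check in CHECKS:
        reason = check(action, s)
        if reason is not None:
            return f"Sorry, I cannot {action}. {reason}"
    return f"OK, I will {action}."


if __name__ == "__main__":
    table = Situation(known_actions={"walk forward"},
                      normally_able={"walk forward"},
                      able_now={"walk forward"},
                      edge_ahead=True)
    print(respond("walk forward", table))  # Sorry, I cannot walk forward. It is unsafe.
    table.human_will_catch = True          # the human promises to catch the robot
    print(respond("walk forward", table))  # OK, I will walk forward.
```

Ordering the checks this way means the robot gives the most basic reason first: it will say it doesn't know how before it ever gets around to weighing safety or social obligations.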

The result is a robot that already appears sensible and might, one day, even seem wise.

Notice how Nao changes his mind about walking forward after the human promises to catch him. It would be easy to envision a different scenario, where the robot says, “No way, why should I trust you?”

But Nao is a social creature, by design. To please humans is in his DNA, so given the information that the human intends to catch him, he steps blindly forward into the abyss. Sure, if the human were to betray his trust, he’d be hooped, but he trusts anyway. It is Nao’s way.

As robot companions become more sophisticated, robot engineers are going to have to grapple with these questions. The robots will have to make decisions not only on preserving their own safety, but on larger ethical questions. What if a human asks a robot to kill? To commit fraud? To destroy another robot?

The idea of machine ethics cannot be separated from artificial intelligence — even our driverless cars of the future will have to be engineered to make life-or-death choices on our behalf. That conversation will necessarily be more complex than just delivering marching orders.