Facebook's "Supreme Court" Faces Skepticism Amid an Ugly Record of Transparency

Trust is not one of Facebook's fortes.

Facebook’s proposed oversight board for content moderation has quickly been dubbed the Facebook “Supreme Court,” but unlike the nine-member body that interprets U.S. law, Facebook’s highest court will face a trust problem from the very beginning.

On Thursday, Facebook released a report on its solicitation of public feedback about what it is officially calling an Oversight Board, which will be established “for people to appeal content decisions through an independent body.”

Responses from more than 2,000 people in 88 countries, a tiny fraction of Facebook’s more than 2.38 billion users, reflect just how far Facebook has to go in the process.

Whether respondents were expressing skepticism about Facebook itself, its poor handling of content moderation, or its status as one of the largest policers of speech on the planet, it’s clear Facebook hasn’t escaped its past scandals.

3. Facebook’s Own Research Shows How Skeptical People Are

The report divides user feedback into areas such as how cases would be selected, who would oversee them, and whether the oversight board could realistically operate independently of Facebook. That last question drew some telling responses.

“Many of those who engaged in consultations expressed a degree of concern over a Facebook-only selection process, but the feedback was split on an alternative solution,” the report reads. “An intermediate ‘selection committee’ to pick the Board could ensure external input, but would still leave Facebook with the task of ‘picking the pickers.’”

Facebook found that many people did not want the company involved in selecting the board, nor did they want board members to choose their own replacements, which they worried could result in a “perpetuation of bias.”

In other words, the feedback gave Facebook a lot of information to work with, just maybe not the kind it was looking for.

2. There Is Already a Lack of Transparency Around Content Moderation and the Moderators

Facebook already has content moderators. About 15,000 of them. The Oversight Board is intended to review high-profile content decisions, but that does nothing to address the opaque policies behind Facebook’s moderation practices, let alone the harm the work causes the people who do it.

Numerous outlets have documented instances of PTSD and substance abuse among moderators, as well as fights, and even deaths, at content moderation facilities.


On Thursday, the Washington Post reported that a content moderator for Facebook had been fired for posting Bruce Springsteen lyrics on an internal message board to protest working conditions.

When the Post sought comment on the story, Facebook referred it to Accenture, which ran the moderation site. Accenture declined to comment. Facebook also declined to comment on the letter the content moderators had sent to management asking for better working conditions.

If this is the model of transparency around Facebook content moderation, it’s hard to see how an Oversight Board would be much different.

1. Facebook, Not the Board, Will Remain the Biggest Policer of Speech in the World

Facebook is already one of the largest, if not the largest, policers of speech in the world, and has huge reach and control over what content stays up. Content moderators, for better or worse, make sure of it.


The Bonavero Institute of Human Rights at the University of Oxford reviewed Facebook’s earlier charter for the board and essentially judged the model to be flawed. Facebook proposed a board that would review high-profile content moderation decisions through an appeals process; the institute argues the board should instead focus on systemic outcomes. The report noted that the moderation process itself was flawed and prone to mistakes. It also argued that the board should have the power to force Facebook to address those weaknesses, something that remains unclear in theory and even less certain in practice.

Earlier this year, Corynne McSherry, legal director at the Electronic Frontier Foundation, who specializes in free speech issues, wrote about social media councils in relation to Facebook’s Oversight Board and questioned whether they could work.

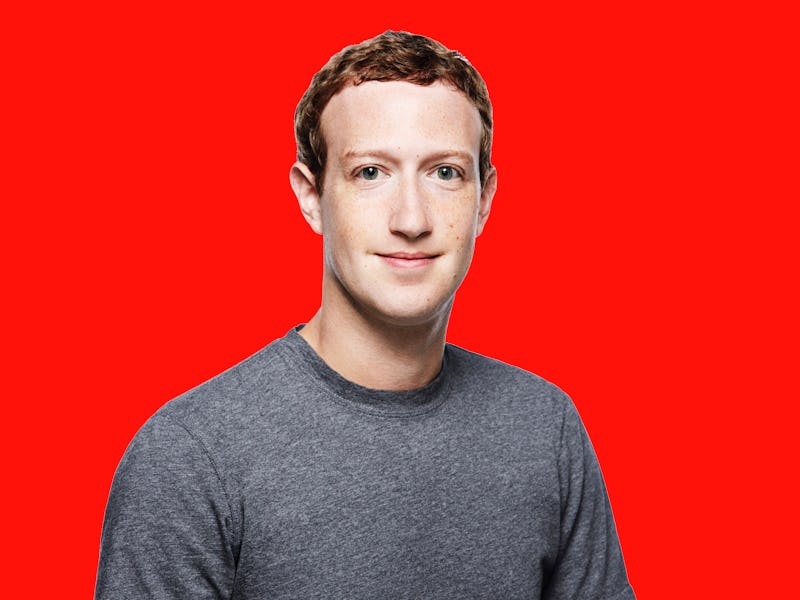

Zuckerberg on Facebook Live.

“Our biggest concern is that social media councils will end up either legitimating a profoundly broken system (while doing too little to fix it) or becoming a kind of global speech police, setting standards for what is and is not allowed online whether or not that content is legal,” McSherry contends in the blog post. “We are hard-pressed to decide which is worse.”

Facebook’s vast user base, its development of a “Supreme Court,” and its announcement of an international currency show that it wants all the power of a country without the direct responsibilities that come with it. Facebook’s own research shows just how skeptical people are of that prospect.