What if first contact with extraterrestrial life isn't a UFO landing in the middle of Central Park, but a message sent across the stars? What might be hidden inside that alien communication?

Astronomers Michael Hippke of Germany's Sonneberg Observatory and John Learned of the University of Hawaii pondered this scenario. They argue persuasively that there is no way to know whether a malicious artificial intelligence is lurking in such a message, and no way to contain that A.I. once the message is read.

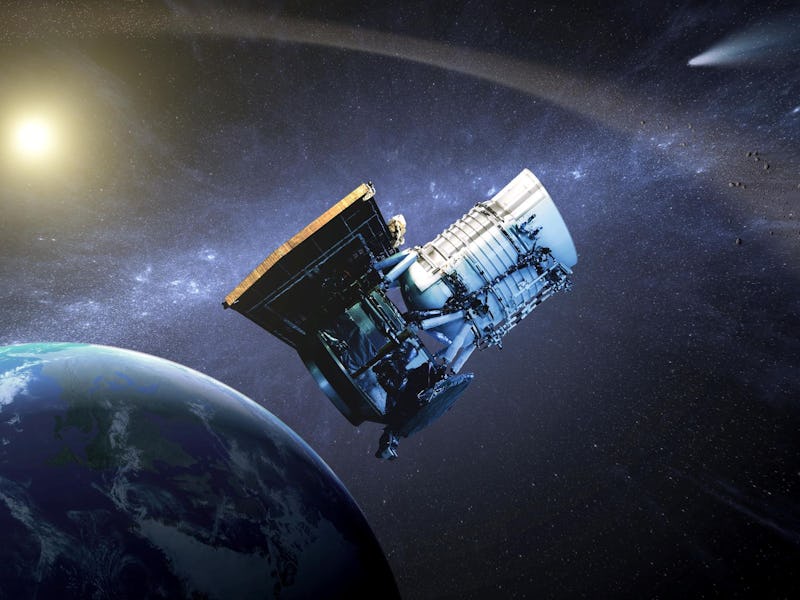


The paper, recently posted on the preprint site arXiv, explains that the fundamental problem is code. A very simple message of plain text, images, or audio could probably be judged safe just by printing it out and inspecting it by hand, but anything more complicated would need a computer to process it. And there is no way to know whether harmful code is hidden inside until a computer actually runs it.

Yes, there are safeguards we could put in place. The authors suggest a quarantined computer located on the moon, with remote-controlled fusion bombs primed to go off at the first sign of trouble. The message would be kept totally secret from the outside world, with only a small team of government researchers and staff aware of what's going on.

The A.I.-free Arecibo message, which humans beamed toward the globular cluster M13 in 1974.

But if there’s a sentient A.I. inside the code that’s more intelligent than we are — and that’s not implausible, given the aliens behind it would have to be considerably more advanced than us to send it in the first place — then it’s only a matter of time before it gains the upper hand.

“The AI might offer things of value, such as a cure for cancer, and make a small request in exchange, such as a 10 percent increase in its computer capacity,” the authors write. “It appears rational to take the offer. When we do, we have begun business and trade with it, which has no clear limit. If the cure for cancer would consist of blueprints for nanobots: should we build these, and release them into the world, in the case that we don’t understand how they work? We could decline such offers, but shall not forget that humans are involved in this experiment.”

Even if the A.I. has to resort to manipulating someone, such as a guard with a sick child, as the authors suggest, it will eventually find a way to escape. Besides, nothing stays secret forever. Could any government, democratic or dictatorial, hope to keep an intrigued public away from an alien intelligence indefinitely?

As soon as one of our computers reads the alien message, it's only a matter of time before the A.I. inside it becomes the dominant intelligence on Earth. Since we control the planet only because we currently hold that position, we would simply have to hope the alien program is benevolent.

And, in fairness, the authors themselves are optimistic.

“We may only choose to destroy such a message, or take the risk,” they write. “It is always wise to understand the risks and chances beforehand, and make a conscious choice for, or against it, rather than blindly following a random path. Overall, we believe that the risk is very small (but not zero), and the potential benefit very large, so that we strongly encourage to read an incoming message.”