Google’s Arts and Culture App Recognizes Faces a Lot Like Humans Do

The brain and the algorithm are more similar than we think.

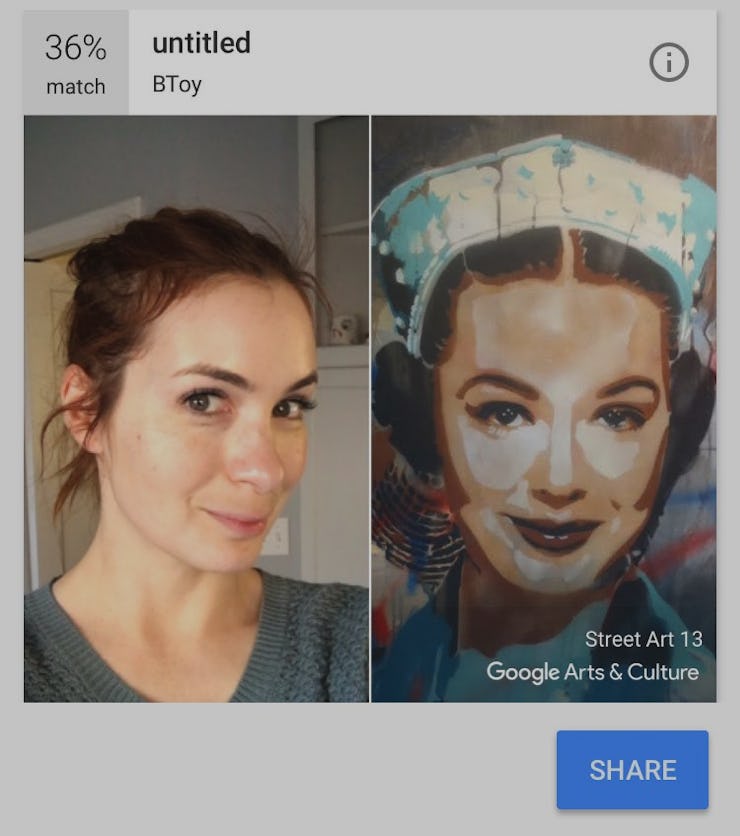

The new face-match feature in Google’s Arts and Culture mobile app became a viral sensation on social media over the weekend, with users both famous and obscure using it to find out which famous portraits they most resemble. Although the app is driven by smart technology, it turns out it works in a way not all that different from how the human brain recognizes faces of its own accord.

The face-matching app is driven by an algorithm that takes an image of a face and pinpoints its most distinctive attributes and facial features. It then tries to match those features as closely as it can with ones found in artworks from the thousands of art museums the Arts and Culture app has access to. Making the matches is not as easy a process as it might sound, but Google’s facial recognition software has made big strides over the last few years. At its core, though, this software has to be taught and trained until it’s ready for practical use.

When it comes to humans, face perception is much less of a learned process. It is built into our neurology: recognizing another human being, deciphering what they’re thinking or feeling from their expressions, assessing who they are and what they’re up to, and much more. Specific neurons fire and regions of the brain light up when our eyes are confronted with someone’s face. The inability to recognize faces is a disorder known as prosopagnosia.

The overall process for facial recognition by both an algorithm and a brain requires a division of labor. Both mechanisms deconstruct an image, then build it back up to recognize the patterns that suggest something is a face.

The brain works first by breaking down the image of a face into its constituent parts (the eyes, nose, mouth, and forehead) and reorienting them so that it has a general sense of the face’s size and shape, as well as the realization that it is indeed a face. The left hemisphere produces the general recognition of the face, while the right hemisphere makes the more nuanced distinctions that determine fine features. It’s thanks to the left brain that you know you’re looking at a human face, and thanks to the right brain that you know whose face it might be. Each of those parts uses individual neurons to push the process forward, so it feels like an instant event in our heads.

For a facial recognition algorithm, the process is largely the same. The software determines the size and orientation of a face, then zooms in on finer features like the eyes, nose, and mouth that determine what that face looks like. Scientists call the result a “faceprint,” and an algorithm like the one in the Arts and Culture app can compare this faceprint with other faceprints made from artistic portraits.
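To make the faceprint comparison a little more concrete, here is a minimal Python sketch. It assumes each face has already been reduced to a short list of numbers (the faceprint) and ranks portraits by cosine similarity, one common way to compare such vectors. The portrait names, the vector values, and the 0 to 100 scoring are all hypothetical; this is an illustration of the general idea, not Google’s actual method.

```python
import math

# Hypothetical faceprints: short made-up vectors standing in for the
# measurements a trained face-recognition model would extract.
# Real systems use learned vectors with far more dimensions.
PORTRAITS = {
    "portrait_a": [0.9, 0.1, 0.3, 0.5],
    "portrait_b": [0.2, 0.8, 0.6, 0.1],
    "portrait_c": [0.7, 0.3, 0.4, 0.6],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_matches(selfie, portraits, top_n=2):
    """Rank portraits by similarity to the selfie's faceprint.

    The "match percentage" here is just the cosine similarity scaled
    to 0-100 (negative values clamped to 0), an illustrative choice.
    """
    scored = []
    for name, faceprint in portraits.items():
        sim = cosine_similarity(selfie, faceprint)
        scored.append((name, round(max(sim, 0.0) * 100, 1)))
    # Highest similarity first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]


# Hypothetical faceprint extracted from a user's selfie.
selfie = [0.9, 0.15, 0.3, 0.45]
print(best_matches(selfie, PORTRAITS))
```

Running it prints the two closest portraits with their scores. In a real system the faceprints would come from a trained model rather than hand-written numbers, and changes in lighting or pose would shift those vectors, which is one reason match scores stay imperfect.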

The limitations of the algorithm, however, are myriad. Facial recognition software can run into problems caused by something as simple as lighting. It can’t always determine emotion (although it’s getting better). And these algorithms certainly don’t match the speed of the brain.

Still, as far as the Arts and Culture face-matching feature goes, the facial recognition software isn’t half-bad. And the match percentage it attaches to each result signals that it’s an imperfect process, so it’s hard to get upset or confused if you find yourself thinking you look nothing like that portrait of an old Greek grampa from the 19th century.

See, I ain’t mad.
