9 Ethical Dilemmas We Weren't Ready for Tech to Dump on Us in 2016

This year's progress gave rise to a number of moral head-scratchers.

For all its painful celebrity deaths, tempestuous elections, and global humanitarian crises, 2016 was also a year of looking ahead. With self-driving cars right around the corner and a real-life expedition to Mars in the making, 2016 saw vast improvements in everything technological, from smartphones to artificial intelligence. But, as has been the case with scientific progress throughout history, these developments have raised a host of new moral and ethical questions. With a great many of them still unanswered, 2017 may prove to be a year of soul-searching and avant-garde values as we decide how life should be steered in our increasingly technological world.

Here are nine such ethical dilemmas that we have yet to solve:

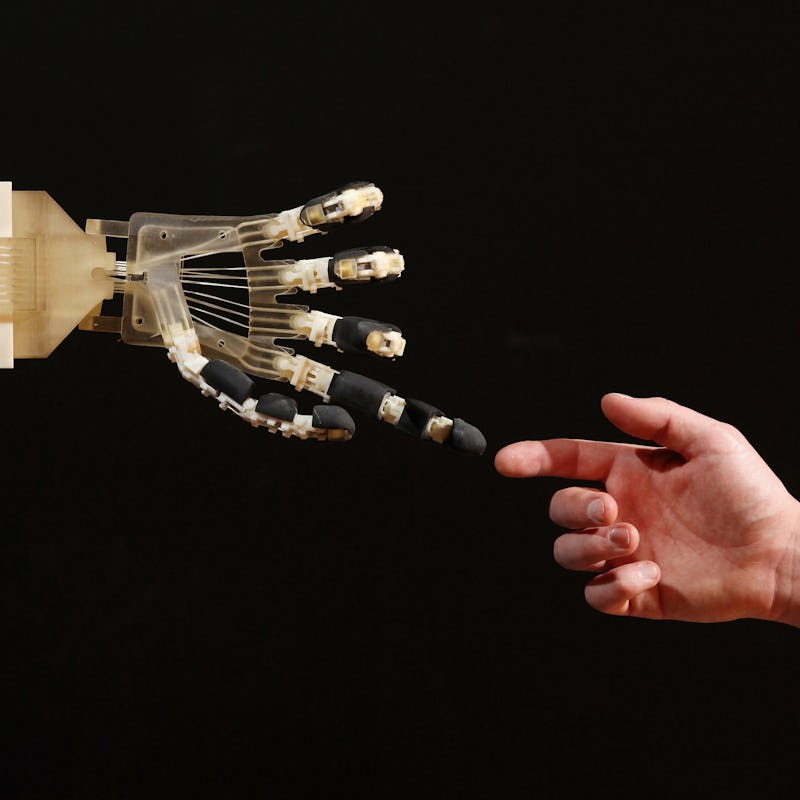

Are robots ever going to be alive?

With artificial intelligence programs reportedly passing the Turing test and HBO’s grand slam of a new show, Westworld, full of self-aware robots, it’s safe to say that questions about what it truly means to be alive and conscious are on lots of people’s minds. Does meeting certain benchmarks signal life? If so, we’re already there. Does it have to do with trauma, as Westworld’s Dr. Ford seems to think? Or is there a spiritual quality to it, something intangible that can never be possessed by a man-made machine, no matter how convincing?

Whatever answer we decide on will have far-reaching implications, even stretching into the realm of things like civil liberties for A.I. and human rights for androids — if indeed you still want to call them human rights. One thing’s for sure: If the hosts in Westworld are alive, then that park sure as hell isn’t legal.

Can robots give consent?

Riding right on the heels of whether or not they’re alive are more detailed questions about how people will be expected to interact with robots. Thanks again to Westworld, as well as the emergence of creepily realistic talking sex robots, sex is one area where the boundaries remain undefined. As we become more comfortable with sex in all its forms, those boundaries are sure to be pushed and explored. Eventually, we must confront the question of whether it is indeed possible to rape a robot.

If you’re the type to believe they can never be alive, then probably not. But what if society collectively decides they can be sentient? Might robots be able to choose, or refuse, sex work in the same way that humans do? Would there be limits on the level of sentience a robot can have if its primary function is sex? Who will set those limits? How alive is too alive for us to have our way with them? The ethics of sex robots will be debated for a long time to come.

How do we make self-driving cars moral?

Continuing with the theme of artificial intelligence is the introduction of self-driving cars into the market. Safer in many ways than human drivers, automated vehicles can predict crashes and other events that humans cannot, and act to avoid or prevent them. The real dilemma, however, arises in situations where there is no avoiding a collision. The self-driving car can still make decisions about how to enter that collision, but what decisions should it make?

That exact question is what the Moral Machine project at MIT is looking to answer. The program (which consists of a compelling survey) is “a platform for gathering human perspective on moral decisions made by machine intelligence.” The survey presents you with various scenarios in which you must choose (on behalf of the self-driving car) who lives and who dies in the oncoming crash. It gives you criteria like age, gender, body type, and social value on which to base your decisions. Do you save the doctor over the criminal, the passenger over the pedestrian, the young over the elderly? These are distinctions that a human driver likely wouldn’t have the time or data to make. But a self-driving car might, and it’s going to have to decide.

Is Facebook ruining America?

No, this is not a question about the nuances of social interaction versus isolation on social media. While still unsolved, that dilemma has been around a bit longer. The newer controversy surrounding Facebook in 2016 had to do with the prevalence of fake news stories. In the final months of the presidential campaign, the most popular fake election stories on Facebook actually drew more engagement than the most popular real ones. Many have accused fake news of handing Donald Trump the presidency, and President Obama has blamed it for impeding progress in fighting climate change.

The question, then, is what to do about it. Facebook CEO Mark Zuckerberg himself has appeared conflicted on the issue, acknowledging the problem of misinformation campaigns but pooh-poohing the idea that they had a measurable impact on the election. In any case, this is shaping up to be a debate about whether outright lies spread on the internet count as protected speech, and whether they can or should be censored. As of right now, Germany seems to think they should.

Can Twitter ban the President of the United States?

In the coming months, Twitter may find itself in uncharted waters, as the incoming president’s habit of tweeting offensive rhetoric could run afoul of the platform’s hateful conduct policy. In some cases, even tweets promoting policies Trump has advocated, such as a Muslim registry or the reinstatement of torture, might violate it. Add to that the fact that Twitter was not invited to a meeting between Trump and other tech leaders, and a scenario emerges in which a sitting president could be banned from social media.

In theory, there’s no law preventing Twitter from banning Trump. But there remains the ethical quandary of whether knowingly cutting the president off from a platform where he can engage with the public is the right thing to do. Hateful or not, Twitter also gives the public a way to criticize the president directly, something everyone would lose if Trump weren’t on there anymore. Still, seeing Jack Dorsey put Trump in his place with a Twitter ban might be too priceless a thing to pass up.

What happens when robots take our jobs?

Trump may have talked up protecting American jobs and bringing others back home, but whether or not he meant it, many of the jobs he has promised to save won’t be around for much longer. A White House study published earlier this year forecasts an “83 percent chance that workers earning less than $20 per hour will lose their jobs to robots.”

Trump voters may say they aren’t worried about it, but they might be singing a different tune when they’re the ones being replaced by Builder Bot 9000. What America and other countries will have to decide is how to take care of workers whose skills become obsolete as job automation becomes more prevalent. Do we hang them out to dry, or retrain them at no cost at public universities? Do we begin taking steps, as Elon Musk has suggested, toward a universal basic income? You can almost hear the cries of “Socialism!” before they begin.

How much is too much privacy?

After the San Bernardino shooting, the FBI announced it had an iPhone used by one of the shooters but was unable to access it. A national debate followed about whether Apple should provide the FBI with a backdoor into the iPhone’s software. Apple, backed by privacy advocates, refused on the grounds that even creating such a backdoor would be dangerous and open to exploitation by other parties. Plus, it would set a precedent wherein the government could potentially use that backdoor to unilaterally access any device in its possession. The FBI countered by appealing to national security.

In the end, Apple held its ground, and the FBI was able to access the phone without the company’s help. This put the immediate matter to bed but left open numerous questions about how far privacy rights extend into our technology. Do they cover phones and other devices? Are they situational, subject to being suspended if national security is at risk? Eventually, these things will have to be legislated more concretely, and 2017 might be the year we see it happen.

Was Peter Thiel wrong to demolish Gawker?

When Gawker filed for bankruptcy after losing Hulk Hogan’s massive lawsuit against the media company for publishing his sex tape, proponents of journalistic freedom became concerned. First, the $140 million awarded to Hogan was clearly an overzealous punishment for the offense. Second, Hogan’s lawsuit was funded by Peter Thiel, whose personal beef with Gawker led him to seek the destruction of the online media outlet. The involvement of Thiel, a tech billionaire with some interesting projects under his belt, raised questions about how much influence the wealthy should be allowed to exert over the press. Nothing Thiel did was illegal, but it’s hard to argue that it wasn’t unethical.

Extinction as a weapon — should we use it?

If we could, say, introduce a genetic modification into malaria-carrying mosquitoes that would render the population all but extinct, should we ever do such a thing? Perhaps surprisingly, this is no longer a hypothetical scenario from some science fiction flick. At Imperial College London, researchers have produced a gene drive (essentially a “selfish” genetic element that gets passed on to far more than the usual half of an organism’s offspring) that would leave most female mosquitoes of the targeted species infertile within a few generations. It would be a Children of Men situation; only in this case, it would be Children of Mosquitoes.

On the surface, this seems like a great idea. We wouldn’t have to worry about curing malaria if its means of spreading disappeared. Plus, keeping in mind that this would affect only specific organisms, who likes mosquitoes, anyway? But the ethical implications of this would extend well beyond malaria prevention, and they are both positive and negative. According to the researchers, we could use gene drives to strengthen endangered species, or species that we want to see preserved. Conversely, they could be used to weaken and destroy any species deemed unwanted. It would be the ultimate realization of playing God, and that kind of power alone is enough to frighten some.

There is also the potential for misuse. As MIT Technology Review writes, “The FBI is looking into whether gene drives could be misused, say, to create a designer plague… Twenty-seven researchers wrote to Science with warnings against the accidental release of gene-drive organisms, something they fear would devastate public trust. Others have said the research ought to be classified, though it’s too late for that.” Whatever scientists and governments decide to do with these findings, humanity will soon have to confront the question of whether there are some scientific avenues that are best left untraveled.