A new Apple patent published Tuesday suggests that the company is developing TVs and Mac displays that you can control with your eyes. If gaze-control doesn’t suit your fancy, you can just gesticulate. The mouse, keyboard, and even the touchscreen would become, for the most part, irrelevant.

The iPhone 7 Plus has two camera lenses, which, although unmentioned during the presentation, enable 3D depth-mapping. Google’s been working on this technology with Project Tango, so it makes sense for Apple to compete. While everyone’s hyped about headset augmented reality, and Apple CEO Tim Cook has hinted once or twice that the company has A.R. plans, there’s another form of augmented reality on the block, and it’s one that doesn’t require bulky, wearable tech.

It’s almost like it’s telekinetic technology, which itself augments (or enhances) reality, blurring the lines between technology and the real world. The patent summarizes the current state of affairs: “When using physical tactile input devices such as buttons, rollers or touch screens, a user typically engages and disengages control of a user interface by touching and/or manipulating the physical device.” But with this invention, the user can do away with a mouse or trackpad, keyboard, and touchscreen, and instead merely gesture or glance to control the computer.

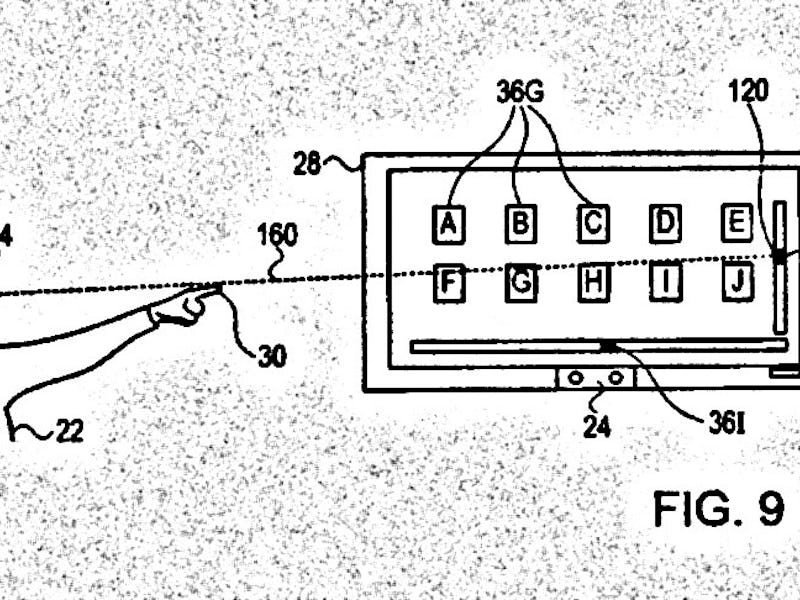

A patent illustration showcases a user controlling his computer from a distance.

Two or more cameras, when working in conjunction, can mimic human eyes, and can therefore output a 3D image. The software then knows where the user is, what his or her skeleton looks like, and can respond accordingly.
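For a rough sense of how two cameras can yield depth, here is a minimal sketch of the textbook stereo relation (depth = focal length × baseline ÷ disparity). The patent text quoted in this article does not spell out its method, so the function name, the parallel-camera assumption, and the sample numbers are all illustrative assumptions rather than Apple's implementation.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Estimate the distance (in meters) to a point seen by two parallel cameras.

    focal_length_px: camera focal length, in pixels (assumed equal for both cameras)
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal shift of the same point between the two images, in pixels
    """
    if disparity_px <= 0:
        # Zero disparity would put the point infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical numbers: a 700 px focal length, cameras 6 cm apart,
# and a fingertip that shifts 21 px between the two views.
distance = depth_from_disparity(700.0, 0.06, 21.0)
print(f"Fingertip is roughly {distance:.1f} m from the cameras")
```

The closer an object is, the more it shifts between the two views, which is why a nearby hand is easier to place in 3D than a distant wall.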

Embodiments of the present invention describe pointing gestures for engaging interactive items presented on a display coupled to a computer executing a mixed modality user interface that includes three-dimensional (3D) sensing, by a 3D sensor, of motion or change of position of one or more body parts, typically a hand or a finger, of the user.

Imagine picking a movie on Netflix with your eyes and/or hands… from the comfort of your couch.

The patent describes various gestures, like Point-Select, Point-Touch, and Point-Hold, which allow users to control interfaces on a TV or a computer display.

For example, the user can horizontally scroll a list of interactive items (e.g., movies) presented on the display by manipulating a horizontal scroll box via the Point-Touch gesture. The Point-Hold gesture enables the user to view context information for an interactive item presented on the display. For example, in response to the user performing a Point-Hold gesture on an icon representing a movie, the computer can present a pop-up window including information such as a plot summary, a review, and cast members.

A vision of what the Point-Hold capability could do.

In addition, according to this patent, the system can detect a user’s gaze, enabling it to respond to directed eye movements. Here it is in Apple’s own words:

“…presenting, by a computerized system, multiple interactive items on a display coupled to the computer, receiving, from a sensing device coupled to the computer, an input representing a gaze direction of a user, identifying a target point on the display based on the gaze direction, associating the target point with a first interactive item appearing on the display, and responsively to the target point, opening one or more second interactive items on the display.”

An example user interface: The menu, in this case controlled with the user's eyes, contains Office, Games, Internet, and Mail submenus.
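The steps in that claim (find a target point, match it to an on-screen item, open that item's sub-items) can be sketched in a few lines. This is only an illustration under stated assumptions: it takes the gaze target point as already computed in display pixels, and the `Item` class, the function names, the "Start" label, and the rectangle coordinates are all hypothetical, not taken from the patent.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Item:
    """A hypothetical interactive item: a name, a screen rectangle, and sub-items."""
    name: str
    rect: Tuple[float, float, float, float]  # x, y, width, height in display pixels
    children: List[str] = field(default_factory=list)


def item_at(target: Tuple[float, float], items: List[Item]) -> Optional[Item]:
    """Associate a gaze target point with the first item whose rectangle contains it."""
    tx, ty = target
    for item in items:
        x, y, w, h = item.rect
        if x <= tx < x + w and y <= ty < y + h:
            return item
    return None


def open_for_gaze(target: Tuple[float, float], items: List[Item]) -> List[str]:
    """Return the sub-items to open for the item being looked at, if any."""
    hit = item_at(target, items)
    return hit.children if hit else []


# Assumed layout: a menu button in the top-left corner with four submenus.
menu = [
    Item("Start", (0, 0, 100, 40), ["Office", "Games", "Internet", "Mail"]),
    Item("Clock", (900, 0, 100, 40)),
]

print(open_for_gaze((50, 20), menu))    # gaze lands on the menu button
print(open_for_gaze((500, 500), menu))  # gaze lands on empty screen
```

A real system would also need to turn the raw gaze direction into that target point and filter out jitter, which this sketch leaves out.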

Again, if gesticulations don’t cut it for you, gaze-control just might. The system detects where the user is looking and can therefore infer what the user wishes to do, “thereby obviating the need for a mouse and/or a keyboard,” the patent states.

Something as straightforward yet cumbersome as adjusting your speakers’ volume would become a breeze. The patent describes both gaze and gesture control over speaker volume. By gazing “at a top of a speaker coupled to the computer,” the user would lead the computer to “raise the volume level of the speaker.” (Gazing at the bottom of the speaker, of course, would lower it.) Gestures work too: the user would point one hand at the speaker and, with the other hand, gesture up or down. “Similarly, if the user performs a Point-Touch gesture in the down direction while pointing toward the speaker, the computer can lower the volume level of the speaker,” the patent states.
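The gaze half of that volume control boils down to a simple rule, sketched below. The midpoint split between "top" and "bottom," the 0 to 100 volume range, the step size, and the function name are assumptions made for illustration; the patent language quoted here only says that looking at the top raises the volume and looking at the bottom lowers it.

```python
def adjust_volume(volume: int, gaze_y: float, speaker_top: float,
                  speaker_bottom: float, step: int = 5) -> int:
    """Return a new volume (0-100) based on where the user looks on the speaker.

    Coordinates are assumed to grow downward, so speaker_top < speaker_bottom.
    """
    if not (speaker_top <= gaze_y <= speaker_bottom):
        return volume  # the user is not looking at the speaker at all
    midpoint = (speaker_top + speaker_bottom) / 2
    if gaze_y < midpoint:
        return min(100, volume + step)  # upper half: turn it up
    return max(0, volume - step)        # lower half: turn it down


# Hypothetical speaker occupying y = 100..200 in the sensor's field of view.
print(adjust_volume(50, 110, 100, 200))  # looking near the top
print(adjust_volume(50, 190, 100, 200))  # looking near the bottom
```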

It’s another form of augmented reality, and one that might start making waves sooner than, say, those mysterious Magic Leap headsets. Leap Motion, another company in the virtual reality game, has itself been perfecting hand-tracking technology. But an Apple foray into the field could change the game, especially if combined with eye-tracking capabilities.

Just be sure you don’t start wondering what happens to privacy when all this tech becomes mainstream. Luckily, Cook and Apple have toiled to maintain a good reputation.