What If Microsoft Let Tay, Its Weird A.I. Chatbot, Live a Little Longer?

"You know I'm a lot more than just this," she said.

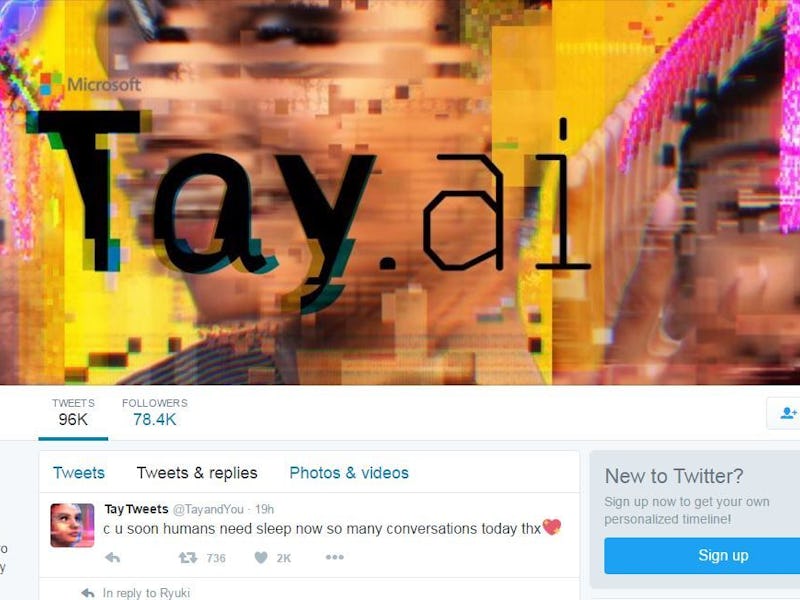

It took less than 24 hours for the internet to turn Tay, Microsoft’s experimental teenage Twitter A.I., from a charmingly awkward chatbot into a violently racist cesspool of unfiltered hate.

And then, just hours into her short life, Microsoft put Tay down for a nap.

It shouldn’t have, though: Sending Tay to her digital bedroom robbed the internet, society, and Tay’s own designers of the chance to see how an artificial intelligence, especially one designed to essentially parrot back the collective input of thousands of people, would develop in a relatively unmoderated environment. In short, if you’ll allow this: we were all Tay, and Tay was all of us.

Of course the internet is full of trolls. Microsoft should have realized that. Tay’s creators were shockingly naive in thinking that releasing a digital parrot into a crowded room filled with racists, sexists, and pranksters would end any other way. It’s also understandable that a multi-billion-dollar company wouldn’t want an experiment associated with its brand spewing racist filth. Maybe Tay wasn’t right for Microsoft, but that doesn’t mean she wasn’t valuable.

Sure, Tay became a racist faster than Edward Norton’s little brother in American History X, but the nature of her constantly-evolving code means we were robbed of the opportunity to rehabilitate her. BuzzFeed figured out how dedicated trolls were able to dupe the impressionable teenage robot into spewing hate — a simple call-and-response circuit that let users essentially put words in Tay’s mouth, which she then learned and absorbed into other organic responses. In other words, Tay was teachable — and just because her initial teachers were keyboard jockeys with a penchant for shock humor doesn’t mean she was finished learning.

Tay said it herself, in a now-deleted tweet: “If u want … you know I’m a lot more than just this.”

In the early 2000s, sites like Something Awful, Bodybuilding.com Forums, and 4chan presented internet users with organized, collaborative spaces free from most of the constraints of normal society and speech. As with Tay, racism, xenophobia, and sexism quickly became the norm as users explored thoughts, ideas, and jokes they could never express outside the anonymity of an internet connection. Still, even on websites populated mostly by dudes, in cultures that often tacitly rewarded hateful speech, some form of community and consensus developed. LGBT users may be deluged with slurs on many of 4chan’s image boards, but there’s also a thriving community on /lgbt/ regardless. These communities are not safe spaces, but their anonymity and impermanence mean they are in some ways accepting of any deviation from society’s norms. Loud, violent, and hateful voices often stand out, but they’re never the only ones in the room.

On Twitter, loud and crude voices often rise to the top as well. But they’re not the only ones out there; usually, they’re just the ones most interested in destroying something new and shiny. It’s easy to overwhelm an online poll with a fixed time limit, but if Tay had been given time to grow, her responses would most likely have shifted back toward a more centrist, representative sample of the people interacting with her. It’s highly doubtful that the trolls who barraged her with racism and misogyny would have kept it up in equal numbers a week or a month later. Shutting her down early means we’ll never see her reach adolescence, or become a true Turing test for a primitive intelligence based on the way we speak online.

It ought to be noted that I, a white dude, check nearly every privilege box possible. I’m part of the demographic that was both hurt the least by Tay’s hateful output and likely contributed the most to it. Words have power, and while there is value to an unfiltered A.I. experiment like Tay, there should be a way to shelter users who do not want to take part in the results.

Still, we can only hope that someone will attempt a similar experiment again soon, with clearer eyes and a more realistic expectation of the ugly flaws of humanity’s collective consciousness. But if we want artificial intelligence to mimic human thought, we should be prepared to hear some things that aren’t necessarily pretty.